Is AI Replacing Jobs Right Now? Analysis by Age Appears to Have Challenges.

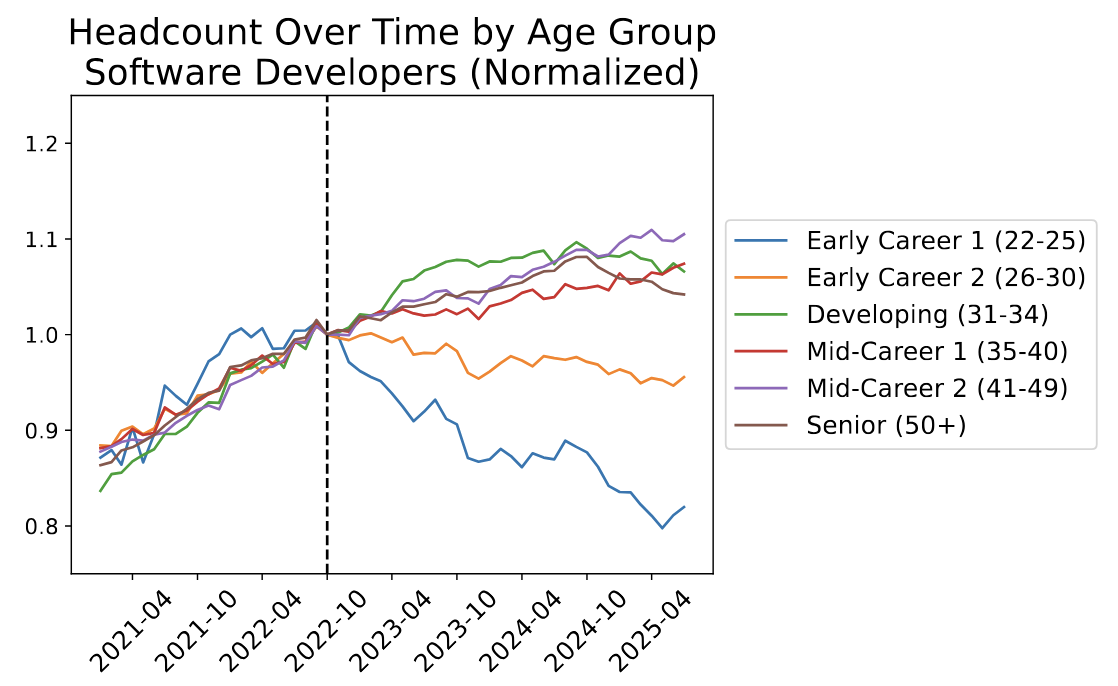

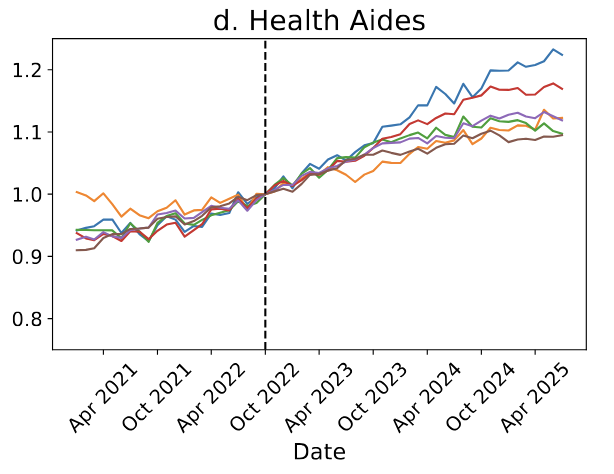

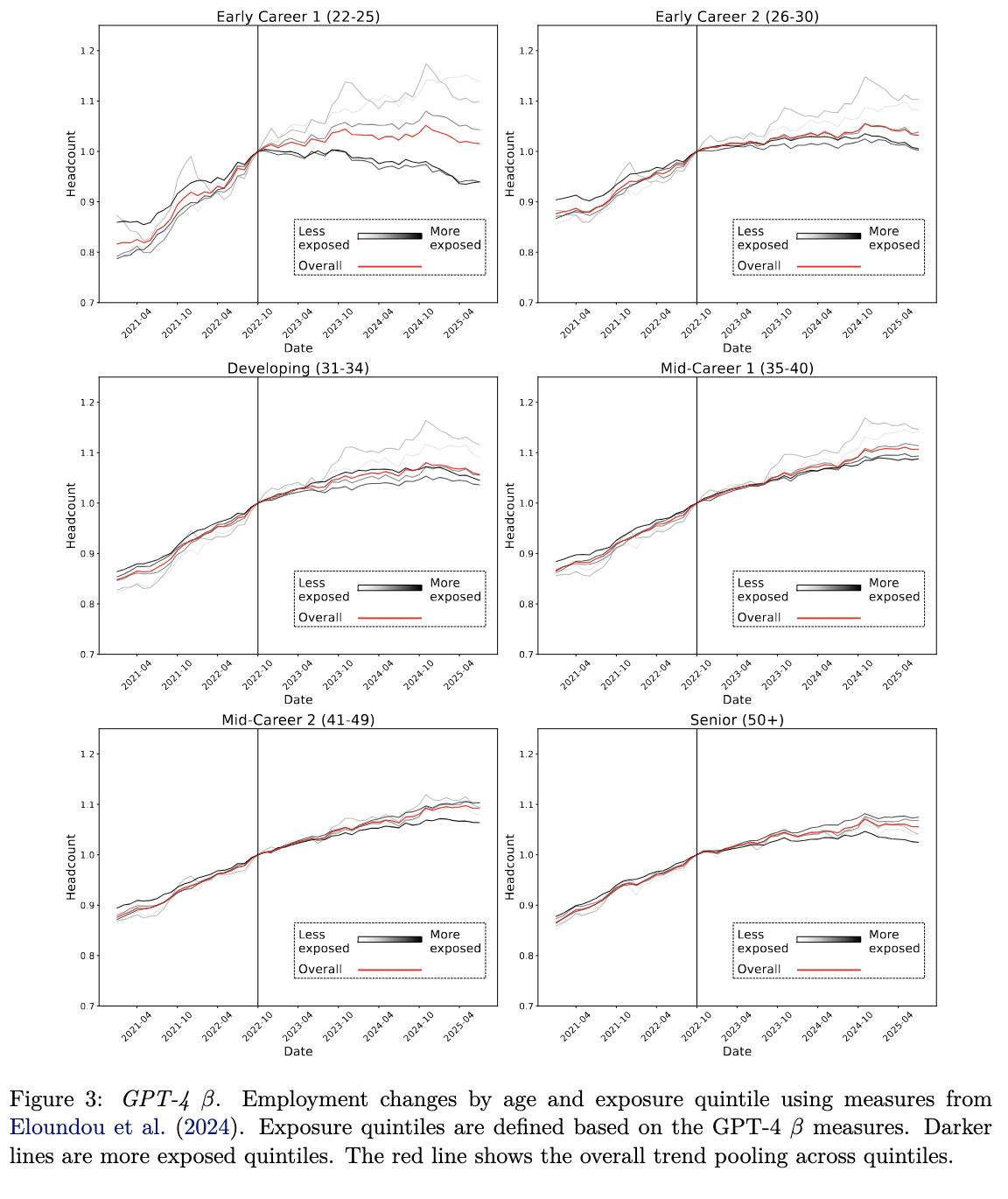

By David Gros.

Last week a fascinating working paper by Brynjolfsson et al. came out of the Digital Economy Lab at Stanford, studying the impacts of AI on the labor market. AI unemployment angst grabs attention, and key results of the study were picked up by major outlets and spread across social media. How well do these alarming headlines hold up when we dig into the paper? I share the motivating concern of the articles: AI will probably automate most tasks and jobs in the coming decades, a view shared by many fellow “AI experts” (Grace et al., 2024). Still, the claims in the headlines might require deeper analysis and discussion.

Here we're going to explore how the study reached its headline result, loosely expressed as: since the release of ChatGPT, AI has caused a ~13% drop in entry-level employment in the areas most exposed to AI disruption (such as software jobs), and this early-career employment drop is a surprising “canary” pointing to larger AI-driven patterns. Then we are going to think through two potential confounders that could deserve additional attention: the influence of age brackets and natural aging, and the effects of overhiring and mean reversion.

Before getting to those questions, let's understand a bit more of the study. The paper uses a really neat dataset from ADP, a maker of payroll software. After some filtering, this dataset has key data points for approximately 4 million American workers. Each job in the filtered data has a job title, which is standardized into categories like “Software Developer”. The authors connect these job categories to “GPT-4 β exposure” values, numbers from prior work at OpenAI by Eloundou et al. (2023) that approximate how automatable each job type is by LLM systems (we subsequently refer to this as a job's β). The ADP data also has the birth year of every worker. The authors randomly assign a birth month to each person and then calculate their age. They interpret age as a proxy for the worker's experience and organize workers into age brackets running from “Early Career 1 (22-25)” to “Senior (50+)”.
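Much of the analysis compares occupations grouped into quintiles of β. As a minimal sketch, assuming unweighted quintiles over occupations (the paper may weight by employment or cut the distribution differently), such a split could look like the following. The function name, occupation names and β values are all made up for illustration and are not taken from Eloundou et al.

```python
import statistics
from bisect import bisect_right
from typing import Dict


def beta_quintiles(betas: Dict[str, float]) -> Dict[str, int]:
    """Assign each occupation to quintile 1 (least exposed) through 5 (most exposed).

    Assumption: unweighted quintiles over occupations, using the standard
    library's default 'exclusive' quantile method to place the cut points.
    """
    cuts = statistics.quantiles(list(betas.values()), n=5)  # four cut points
    # bisect_right counts how many cut points lie at or below each beta.
    return {occ: bisect_right(cuts, b) + 1 for occ, b in betas.items()}


# Hypothetical occupations with made-up, evenly spaced beta values.
example = {f"occupation_{i:02d}": i / 10 for i in range(1, 11)}
quintile = beta_quintiles(example)
print(quintile["occupation_01"], quintile["occupation_10"])
```

With ten evenly spaced made-up β values, each quintile receives two occupations, so the least exposed one lands in quintile 1 and the most exposed in quintile 5.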
This gives all the components for the main results. The authors highlight how, in jobs with high GPT-4 exposure β like software development, employment in the lower age brackets declined while the higher age brackets stayed the same or grew. Grabbing from their Figure 1:

Meanwhile, a lower-β job like health aide does not show this pattern. Grabbing from their Figure 2:

Looking at quintiles of job β, they note a similar trend. Here we quote their conclusions to summarize the key claims of the paper:

> We document six facts about the recent labor market effects of artificial intelligence:
>
> - First, we find substantial declines in employment for early-career workers in occupations most exposed to AI, such as software development and customer support.
> - Second, we show that economy-wide employment continues to grow, but employment growth for young workers has been stagnant.
> - Third, entry-level employment has declined in applications of AI that automate work, with muted effects for those that augment it.
> - Fourth, these employment declines remain after conditioning on firm-time effects, with a 13% relative employment decline for young workers in the most exposed occupations.
> - Fifth, these labor market adjustments are more visible in employment than in compensation.
> - Sixth, we find that these patterns hold in occupations unaffected by remote work and across various alternative sample constructions.
>
> While our main estimates may be influenced by factors other than generative AI, our results are consistent with the hypothesis that generative AI has begun to significantly affect entry-level employment.

We're glossing over a lot of details here. We refer back to the full paper for more, but these are the key details needed to explore the nuances and to discuss why several of these conclusions seem confusing.

The study divides workers into age brackets. There are a few ways to do this, but the most sensible reading of the paper is that they recalculate the brackets at every time step.
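The age pipeline (birth year only, a randomly drawn birth month, then an age and a bracket recalculated at each time step) can be sketched as follows. This is a minimal illustration, not the authors' code: the function names are hypothetical, and because only the 22-25, 26-30 and 50+ brackets are named in this post, the single 31-49 bracket and the label of the 26-30 bracket are assumptions.

```python
import random
from datetime import date
from typing import Optional, Tuple

# Hypothetical bracket table. The 22-25, 26-30 and 50+ brackets are named in the
# text; the single 31-49 bracket is an assumption standing in for whatever
# intermediate brackets the paper uses.
BRACKETS = [
    ("Early Career 1 (22-25)", 22, 25),
    ("26-30", 26, 30),
    ("31-49 (assumed)", 31, 49),
    ("Senior (50+)", 50, 150),
]


def assign_birth_month(birth_year: int, rng: random.Random) -> Tuple[int, int]:
    """Only the birth year is recorded, so draw a birth month uniformly at random."""
    return birth_year, rng.randint(1, 12)


def age_at(birth: Tuple[int, int], when: date) -> int:
    """Whole-year age during the month of `when`, treating the birthday as
    reached as soon as its month arrives."""
    year, month = birth
    age = when.year - year
    if when.month < month:
        age -= 1
    return age


def bracket(age: int) -> Optional[str]:
    """Return the bracket name, or None for workers younger than 22."""
    for name, low, high in BRACKETS:
        if low <= age <= high:
            return name
    return None


rng = random.Random(0)
worker = assign_birth_month(1997, rng)
# Recalculating at each time step moves the same person into an older bracket.
for when in (date(2022, 11, 1), date(2025, 7, 1)):
    age = age_at(worker, when)
    print(when, age, bracket(age))
```

For a worker born in 1997, the loop reports an age of 24 or 25 (Early Career 1) for November 2022 and 27 or 28 (the 26-30 bracket) for July 2025, whichever birth month is drawn. Nothing about this person's job changed; only the bracket label did.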
For each age bracket, they normalize the counts so that 1.0 is the number of jobs in that bracket in November 2022. This methodology appears to have concerning implications. The headline result is the 13% decline in the 22-25 Early Career bracket. Notably, however, this bracket is only four years wide and will be the most affected by slow hiring. Imagine a case where hiring froze completely across all ages, without any layoffs. The youngest bracket would naturally lose approximately a quarter of its population each year as 25-year-olds aged into the 26-30 bracket, with no new workers entering at the bottom.

To illustrate, we plot a simulation of a complete hiring freeze for Software Developers (a high-β job). In this scenario there are no layoffs, but existing workers age over time. We don't have access to Brynjolfsson et al.'s data, and they do not report the non-normalized counts, so we approximate the starting demographics using data from the Bureau of Labor Statistics Current Population Survey (BLS CPS) (Flood et al., 2024), averaging the monthly data across all of 2024. The CPS interviews about 60,000 households per month (only a small fraction of whom hold any given job), so the data is very noisy for this use case, but it gives an approximate age-demographic curve. In this extreme full hiring freeze, the “Early Career” bracket drops about 64% as the demographic moves up.

There was not a complete hiring freeze post-ChatGPT. However, for reasons not attributable to AI (as discussed below), hiring might have been slower than in 2022. Any slowdown will naturally affect the youngest bracket the most, and we should avoid reading too much into it. Demographic differences between jobs might exaggerate or soften this effect.

Bottom line: early-career positions might be declining in high-β sectors.
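The hiring-freeze thought experiment above can be sketched in a few lines. This is not the CPS-based simulation behind the plot: as a simplifying assumption, it starts from a hypothetical flat age profile (one worker per month of age from 22 to 64) and ignores retirement. The function name `bracket_series` is hypothetical, and the `fixed_cohort` flag keeps each worker's November 2022 age fixed, for comparison with brackets recalculated at every step.

```python
def bracket_series(start_ages, months, low_years, high_years, fixed_cohort=False):
    """Normalized size of an age bracket under a complete hiring freeze.

    start_ages: {age in months at Nov 2022: number of workers} (hypothetical input)
    Returns sizes for months 0..months, with 1.0 = the bracket's size at month 0.
    No one is hired or laid off; the only change is that workers get older.
    """
    low, high = low_years * 12, (high_years + 1) * 12  # half-open [low, high) in months

    def size(k):
        # With fixed cohorts, ages stay at their Nov 2022 values; otherwise
        # every worker is k months older when brackets are recalculated.
        shift = 0 if fixed_cohort else k
        return sum(n for age, n in start_ages.items() if low <= age + shift < high)

    base = size(0)
    return [size(k) / base for k in range(months + 1)]


# Hypothetical flat profile: one worker per month of age from 22y0m to 64y11m.
flat = {age: 1 for age in range(22 * 12, 65 * 12)}

# Nov 2022 -> Jul 2025 is 32 months.
early = bracket_series(flat, 32, 22, 25)
next_up = bracket_series(flat, 32, 26, 30)
senior = bracket_series(flat, 32, 50, 150)
early_fixed = bracket_series(flat, 32, 22, 25, fixed_cohort=True)

for label, series in [("22-25", early), ("26-30", next_up), ("50+", senior),
                      ("22-25 fixed cohort", early_fixed)]:
    print(f"{label:>20}: month 12 = {series[12]:.2f}, month 32 = {series[32]:.2f}")
```

Under these assumptions the recalculated 22-25 bracket falls to 0.75 after 12 months and to about 0.33 after 32 months, the 26-30 bracket stays at 1.00, the 50+ bracket grows, and the fixed-cohort series stays flat at 1.00. That qualitatively resembles the pattern described for high-β jobs, yet it arises here with no layoffs and no AI at all. Ages are tracked in months to mirror the random birth-month assignment; with a real, non-flat age profile the exact numbers would differ.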
However, the data might not imply a connection between AI and employee experience as claimed, or that the market for a 22-year-old in high-β roles is more suppressed than for a 35-year-old. Instead, it might reflect a property of the data: there is no natural aging into 22-year-old slots the way there is for 35-year-old slots.

Alternative approach? There are potentially simple ways to avoid these problems. A more robust alternative would be to fix each employee's age, at every point in the time series, to their age in November 2022, keeping workers in fixed cohorts. This would mostly eliminate the “aging out” issue and might yield different trends. While the authors look at total employment counts, depending on what metadata is available, one could also focus on new hires or layoffs.

I'll stress that it is very possible the study already uses a more robust method than recalculating age brackets monthly (in which case my whole section here is moot). However, I believe the most sensible reading implies they recalculate at each step. I reached out to the authors to clarify this. Regardless, we hope this discussion encourages the authors, or similar future work, to add language clarifying the age-bracketing approach and its implications.

We do not dispute that employment in high-β areas could be slowing, but can it be directly attributed to AI, as many headlines imply? The paper acknowledges a few alternative hypotheses and takes some steps to explore them, including remote work, tech jobs, and certain firms making excessive layoffs at a given time point. However, the paper could devote more discussion to the peculiar state of the labor market during the studied period of January 2021 to July 2025. January 2021 was not a normal time, due to the macroeconomic effects of COVID.
During COVID, many “low-computer,” real-world jobs shut down, and with near-zero interest rates making money cheap, there was increased investment in computer-centric jobs. Thus an alternative hypothesis is that this led to overhiring in high-β jobs, which was corrected post-ChatGPT as interest rates rose and the set of available jobs reset.

Here we show a 6-month rolling average of the CPS data for software developers compared to health aides. It illustrates how a job like software developer might already have been elevated in November 2022, while other roles had declined from pre-COVID levels. This data is noisy, so we view it as weak evidence.

We also attempted to explore quintiles over time via CPS data. However, matching the Eloundou et al. GPT-4 β task-level values to CPS data is messy, and in a quick study I am not confident I am doing it the same way as Brynjolfsson et al. Our preliminary estimate does not show noticeable over- or under-hiring that splits cleanly by quintile. More exploration is needed, ideally with the higher-quality data the authors have access to.

The authors use a fixed-effect model as part of their argument. It takes a bit of time to think through the mechanics and implications of this model, but it does not appear able to handle these cross-time, industry-level mean reversion effects. As we understand it, firm-time effects absorb shocks shared by all of a firm's workers in a given month, so an overhiring correction concentrated in high-β occupations would still show up as an exposure effect. The fixed-effect model also does not seem able to handle the age-bracket issue discussed above.

AI has affected many individual jobs in just the short ~2.5 years of the post-ChatGPT world. However, any analysis trying to look at this at the macro level must recognize the odd state of the post-COVID world in November 2022. Drops in high-β roles are also consistent with mean reversion paired with broader economic uncertainty in 2025. Any macro-level changes will likely take a few more years to distinguish cleanly.

All clear?
This paper notes a metaphorical “canary in the coal mine” of falling youth employment in certain areas as a warning of what's to come. It seems clear that many AI-at-risk jobs are not hiring briskly, but confounders might make it less clear whether the “gas in the mine” is from AI. However, we should be cognizant that even if there is no gas now, the canary might be right in the end. After all, the town is investing billions to fill the mine to the brim with dynamite, with little more than some hard hats and a motivational poster to keep workers safe. Continued vigilance and action are needed.

Limitations: This exploration was fairly rough, but it hopefully builds a better understanding of a working paper that has been reported extensively in the press and on social media. A stronger version of this article would sort through the β quintiles, more fully recreate the work with CPS data, and better discuss other data sources and research, but that is left to future work. These two factors seem important, but I'll emphasize that I'm a computer scientist, not an economist; Brynjolfsson et al. have vastly more background here. Last week (Aug 28) I emailed them with these questions but haven't heard back yet (it admittedly was a complicated email to reply to). I hope this prompts better discussion of the age bracketing and potential mean reversion effects in future work in this important area.

Paper Summary

Influence of Age Brackets and Natural Aging

The Effects of Overhiring and Mean Reversion?

Conclusion